Personal Prelude

When I first started working in education, the term for ensuring consistent assessment was 'verification'. It felt precise, almost clinical, and 'Internal Verification' sounded positively unpleasant. Later, the terminology shifted to 'moderation', a word that, for me, always carried a hint of something more nuanced, perhaps even something human. Yet, as I delved into the practicalities of the process, particularly in vocational education assessment, I often found myself looking for that 'human element' amidst the checklists and procedures. After all, nobody gets into education simply to fill in paperwork; we want to help and bring on the next generation.

Even though I was more comfortable with the new name, a vital question lingered for me: is moderation, however robust, just a process? All too often moderation, despite its good intentions, gets bogged down in a bureaucratic morass. When this happens, the paperwork and procedures (the means) inadvertently become ends in themselves, and the genuine educational benefits are lost from sight. My argument is that by actively focusing on the broader "educational good" that moderation serves, this "box-ticking" process can itself be transformed into an essential vehicle for positive change. It was this search, this personal reflection on the nature of our collective work, that led me to consider how moderation mirrors ideas of reflective practice: not just individual reflection, but a powerful form of group reflection. From there, it led me to broader philosophical concepts in Aristotle's Nicomachean Ethics, and to the idea that moderation, particularly through its feedback loops, can truly be a route to 'the good', becoming an educational ethic rather than merely an education management function. This active potential is crucial; when moderation degrades into a mere administrative exercise, it fails to grasp its own transformative power.

Introduction

This is not a 'how to' post. There are hundreds of those just a Google away. Instead, I want to begin by considering how moderation can improve standards both diachronically (over time) and synchronically (at a given point in time). In other words, I start with what moderation does, or could do, before moving on to what moderation is, and why it has to break away from being a closed managerial function if it is to realise its transformative power.

Moderation: The 3-Way Pivot for Continuous Improvement

Moderation, particularly of an unsuccessful candidate's performance, has effects that extend far beyond that individual. It serves as a 3-way pivot for continuous improvement, directing feedback and insights towards three crucial types of standard: educational, performance and assessment. Beyond this three-way pivot across standards, moderation also connects back to the foundational educational principles of validity, reliability and fairness, which underscores its role as a vitally important procedure.

When moderation highlights common areas of weakness across multiple components of an assessment, or specific deficiencies in an apprentice's performance, this feedback is invaluable for the college. It can inform improvements in teaching methodologies, the curriculum's emphasis on certain topics, and the overall instructional design. This continuous feedback loop is essential for maintaining and raising educational standards over time: it is feedback now for improved performance tomorrow. It works by ensuring that the curriculum remains current, that teaching methods are effective, and that the emphasis placed on particular features of the course genuinely leads to the required levels of competence.

The direct feedback given to the apprentice about an unsuccessful performance, when clearly articulated, becomes a vital part of their preparation for a resit or retake. It pinpoints exactly where they went wrong and which skills or knowledge need further development. The feedback that moderation generates to improve educational standards can also benefit candidates when colleges provide remedial training before the retake. Effective moderation thus establishes what might be called a two-track feedback model: one track runs directly from the assessor to the apprentice, the other runs through the college's training. Together, the two tracks help improve the performance standards associated with the assessment.

The same idea of different routes to improvement applies to assessment standards. Moderation inherently scrutinizes the assessment itself. If it reveals ambiguities in the assessment task, inconsistencies in marking guides, or unclear instructions, this feedback directly improves the quality and fairness of future assessments, helping to ensure that the assessment accurately measures the intended learning outcomes.

Crucially, the internal verifier's role in monitoring patterns of mistakes across different apprentices taking the same assessment is key to prompting reflective action on educational and assessment standards. These patterns might be reviewed, perhaps quarterly, and reported to pre-arranged, standardized meetings or consortia, where assessors and college leaders can determine whether an apprentice's mistakes are truly individual or, more significantly, evidence of underlying weaknesses in the training provided or in the assessment design itself. There will always be a need for full-scale reviews of the assessment and the assessment process, but moderation provides continuous professional monitoring of standards. This systematic analysis turns individual assessment outcomes into valuable data for continuous improvement across the entire vocational programme. To borrow an electrical metaphor, moderation is the equivalent of ongoing maintenance, as opposed to periodic inspection.

Beyond Apprentice Performance: Moderating Assessor Performance

While the initial verification process focuses on the apprentice’s performance, a truly comprehensive moderation strategy must also consider assessor performance. This requires a sensitive and supportive approach, particularly given industrial relations considerations.

The best way to address assessor performance within moderation is through a collaborative decentralisation of the moderation process.

Assessors should regularly engage in peer review of each other's judgments, critically examining each other's marking and feedback against agreed standards. This collaborative approach fosters a shared understanding of criteria and helps to identify unconscious biases or inconsistencies in application without being punitive. And because no one is perfect, it also encourages professional humility. Indeed, moderation is the antidote to perfectionism.

This, in turn, provides opportunities for the professional development of assessors. When patterns in assessor marking are identified, these become opportunities for targeted training, workshops, or one-on-one support, rather than criticism or disciplinary action. The goal is to enhance their understanding of assessment practices and standards.

Education Scotland emphasizes that "engaging in the moderation process with colleagues will assist you in arriving at valid and reliable decisions on learners' progress" and promotes a "shared understanding of standards and expectations" among practitioners across all sectors. This aligns with the collaborative and transparent principles of moderation.

Moderation meetings, especially those involving the internal verifier, should include calibrated discussions where assessors can collectively review samples of work and discuss their rationale for marks and feedback. This open dialogue helps to align individual interpretations with the shared understanding of standards.

Ultimately, what emerges from these best practices is that moderation is effectively professional group reflection. It is a structured and collaborative process in which assessors, internal verifiers, and indeed the entire vocational education institution engage in a cycle of learning and improvement. In line with theories of reflective practice, moderation moves beyond simply "doing" assessment to actively "reflecting on" and "reflecting in" the practice of assessment itself. It allows educators to collectively scrutinize their judgements, challenge assumptions, identify systemic issues in training or assessment design, and continually refine their approach so that every apprentice receives fair, consistent, and high-quality assessment.

Moderation as Ethic

At the beginning of this post, I mentioned how reflecting on the shift from 'verification' to 'moderation' led me to Aristotle's Nicomachean Ethics. Aristotle saw "the good" as the aim of all human activity (our telos), achieved through virtuous practice and the pursuit of excellence. In this light, moderation in vocational education can be seen not merely as an administrative process, but as a route to "the good" in education itself.

This pursuit of 'the good' finds a tangible parallel in industry standards. BS 7671 requires that "good workmanship" be used (Regulation 134.1.1). It is worth considering not only what the Wiring Regulations state, but where they state it. Its position in Part 1 of the book, among the fundamental principles, underscores that "good" is a pervasive standard: a responsibility woven into the very fabric of electrical installation work. It is there because only by aiming at the good from the very beginning can we hope ultimately to arrive there.

The Regulation's simple yet powerful directive applies not just to the apprentice or qualified electrician, but extends as a guiding principle to the assessor and the organization the assessor belongs to. The pursuit of "the good" is fundamentally what connects the various components of vocational education. Just as in electrical installation, the pursuit of the good is a responsibility that runs through training, assessment, and professional educational practice.

It is possible to regard moderation as perhaps the single most important process in the whole field of learning and assessment: it is the process that ensures teaching, learning and assessment operate as effectively as possible. When moderation is practiced with integrity and a focus on continuous improvement, it cultivates:

- A Good Educational Standard: Ensuring that what is taught at college truly equips apprentices with the necessary knowledge and skills.

- A Good Assessment: Guaranteeing that assessments accurately and fairly measure competence, providing a clear pathway for learners to demonstrate their abilities.

- A Good Performance: Supporting apprentices to develop the practical skills and theoretical understanding required to excel in their chosen trade.

These elements all combine to produce "good electricians" who are not only technically proficient but also ethically grounded in their practice. In this sense, moderation, particularly through its two-track feedback mechanisms (direct from the assessor and systemic insights via the college), elevates itself beyond a management function to become a profound educational ethic – a commitment to excellence and the holistic development of competent, skilled, and responsible individuals in the workforce.

Sources & Further Reading:

Aristotle (2009) Nicomachean Ethics, tr. D. Ross, Oxford World's Classics, Oxford: Oxford University Press.

BS 7671:2018+A2:2022 (2022) Requirements for Electrical Installations, IET Wiring Regulations, Eighteenth Edition, London: IET.

Image Credit

Aristotle, photographer Nick Thompson, Flickr, Uploaded on March 31, 2012, https://www.flickr.com/photos/pelegrino/6884873348, CC-BY-NC-SA 2.0

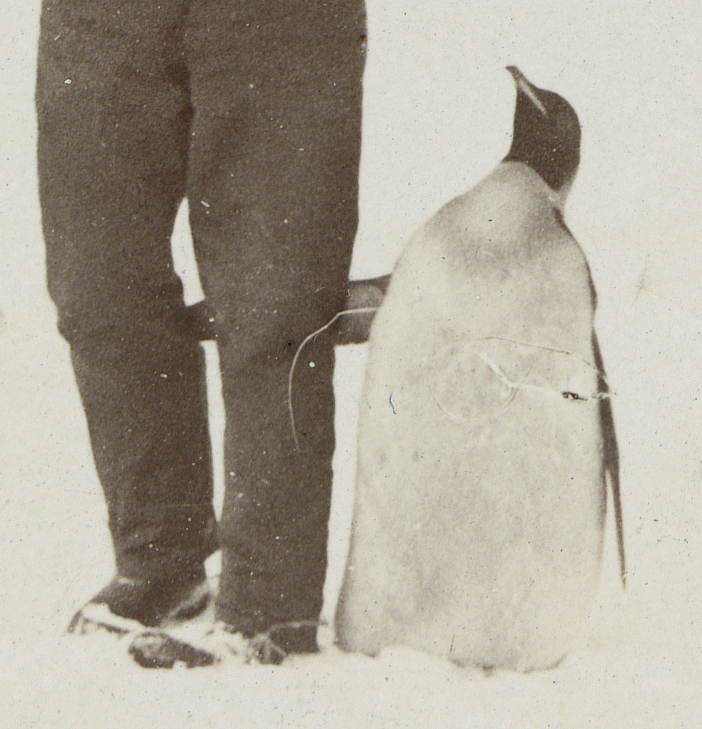